What Does TCP Do to the Time-out Period if the Round Trip Time Decreases?

By plotting a 3D plot of node versus time versus response we can look for correlations of several nodes having poor performance or being unreachable at the same time (maybe due to a common cause), or a given node having poor response or being unreachable for an extended time. To the left is an example showing several hosts (in black) all being unreachable around 12 noon.

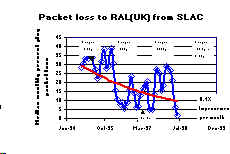

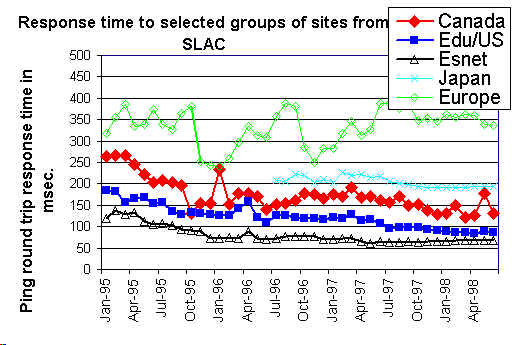

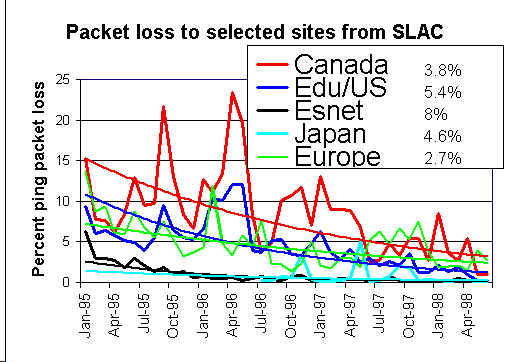

Tables of monthly medians of the prime time (7am - 7pm weekday) 1000 byte ping response time and 100 byte ping packet loss allow us to view the data going back for longer periods. This tabular data can be exported to Excel and charts made of the long term ping packet loss performance.

One method requires injecting packets at regular intervals into the network and measuring the variability in the arrival time. The IETF has IP Packet Delay Variation Metric for IP Performance Metrics (IPPM) (see also RTP: A Transport Protocol for Real-Time Applications, RFC 2679 and RFC 5481).

We measure the instantaneous variability or "jitter" in two ways.

- Let the i-th measurement of the round trip time (RTT) be R_i; then we take the "jitter" as being the Inter Quartile Range (IQR) of the frequency distribution of R. See the SLAC <=> CERN round trip delay for an example of such a distribution.

- In the second method we extend the IETF draft on Instantaneous Packet Delay Variation Metric for IPPM, which is a one-way metric, to two-way pings. We take the IQR of the frequency distribution of dR, where dR_i = R_i - R_(i-1). Note that when calculating dR the packets do not have to be adjacent. See the SLAC <=> CERN two-way instantaneous packet delay variation for an example of such a distribution.
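The two jitter measures described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names and the sample RTT values are hypothetical, the quartiles use the "inclusive" interpolation of the standard library (the original analysis may have used a different quartile convention), and the differences are taken between successive received samples.

```python
import statistics

def iqr(values):
    """Inter Quartile Range: third quartile minus first quartile."""
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    return q3 - q1

def rtt_jitter(rtts):
    """Method 1: IQR of the distribution of the RTT samples themselves."""
    return iqr(rtts)

def ipdv_jitter(rtts):
    """Method 2: IQR of the differences between successive RTT samples."""
    deltas = [b - a for a, b in zip(rtts, rtts[1:])]
    return iqr(deltas)

# Hypothetical RTT samples in milliseconds (not measured data).
sample_rtts = [170.0, 172.0, 171.0, 180.0, 169.0, 175.0, 171.0, 173.0]
print("IQR jitter:", rtt_jitter(sample_rtts))
print("IPDV jitter:", ipdv_jitter(sample_rtts))
```

A steadily rising RTT gives a non-zero result for the first method but zero for the second, which is one reason to look at both.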

By viewing the Ping "jitter" between SLAC and CERN, DESY & FNAL it can be seen that the two methods of calculating jitter track one another well (the first method is labelled IQR and the second labelled IPD in the figure). They vary by two orders of magnitude over the day. The jitter between SLAC & FNAL is much lower than between SLAC and DESY or CERN. It is also noteworthy that CERN has greater jitter during the European daytime while DESY has greater jitter during the U.S. daytime.

We have also obtained a measure of the jitter by taking the absolute value of dR, i.e. |dR|. This is sometimes referred to as the "moving range method" (see Statistical Design and Analysis of Experiments, Robert L. Mason, Richard F. Gunst and James L. Hess. John Wiley & Sons, 1989). It is also used in RFC 2598 as the definition of jitter (RFC 1889 has another definition of jitter for real time use and calculation). See the Histogram of the moving range for an example. In this figure, the magenta line is the cumulative total, the blue line is an exponential fit to the data, and the green line is a power series fit to the data. Note that all three of the charts in this section on jitter are representations of identical data.
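As a rough illustration of the moving range method, the sketch below computes |dR| for successive RTT samples. The function names are hypothetical, and summarizing the moving ranges by their mean is an assumption made for this example; the text itself only describes plotting their histogram.

```python
import statistics

def moving_ranges(rtts):
    """Absolute differences |dR| between successive RTT samples."""
    return [abs(b - a) for a, b in zip(rtts, rtts[1:])]

def mean_moving_range(rtts):
    """One possible single-number summary of the moving ranges (an assumption)."""
    return statistics.fmean(moving_ranges(rtts))

# Hypothetical RTT samples in milliseconds.
print(moving_ranges([10, 13, 11, 11]))
```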

In order to more closely understand the requirements for VoIP and in particular the impacts of applying Quality of Service (QoS) measures, we have set up a VoIP testbed between SLAC and LBNL. A rough schematic is shown to the right. Only the SLAC half circuit is shown in the schematic; the LBNL end is similar. A user can lift the phone connected to the PBX at the SLAC end and call another user on a phone at LBNL via the VoIP Cisco router gateway. The gateway encodes, compresses etc. the voice stream into IP packets (using the G.729 standard) creating roughly 24kbps of traffic. The VoIP stream includes both TCP (for signalling) and UDP packets. The connection from the ESnet router to the ATM cloud is a 3.5 Mbps ATM permanent virtual circuit (PVC). With no competing traffic on the link, the call connects and the conversation proceeds normally with good quality. Then we inject 4 Mbps of traffic onto the shared 10 Mbps Ethernet that the VoIP router is connected to. At this stage, the VoIP connection is broken and no further connections can be made. We then used the edge router's Committed Access Rate (CAR) feature to label the VoIP packets by setting Per Hop Behavior (PHB) bits. The ESnet router is then set to use the Weighted Fair Queuing (WFQ) feature to expedite the VoIP packets. In this setup voice connections can again be made and the conversation is again of good quality.

One can also measure the frequency of outage lengths using active probes and noting the time duration for which sequential probes do not get through.
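A minimal sketch of this idea follows, assuming probes are sent at a fixed interval and each probe is recorded simply as answered or not. The function names and the probe record are hypothetical, and the outage duration is approximated as the number of consecutive failed probes times the probe interval, so its resolution is limited by that interval.

```python
from collections import Counter

def outage_lengths(probe_ok, interval_s):
    """Duration in seconds of each run of consecutive failed probes."""
    lengths = []
    run = 0
    for ok in probe_ok:
        if ok:
            if run:
                lengths.append(run * interval_s)
            run = 0
        else:
            run += 1
    if run:  # an outage still in progress at the end of the record
        lengths.append(run * interval_s)
    return lengths

def outage_histogram(probe_ok, interval_s):
    """Frequency of each observed outage length."""
    return Counter(outage_lengths(probe_ok, interval_s))

# Hypothetical record: True means the probe got through.
results = [True, False, False, True, True, False, True, False, False, False]
print(outage_lengths(results, interval_s=60))  # [120, 60, 180]
```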

Another metric that is sometimes used to indicate the availability of a phone circuit is Error-free seconds. Some measurements on this can be found in Error free seconds between SLAC, FNAL, CMU and CERN.

There is also an IETF RFC on Measuring Connectivity and a document on A Modern Taxonomy of High Availability which may be useful.

In the above plot, the loss and response time are measured during SLAC prime time (7am - 7pm, weekdays); the other measures are for all the time.

- The loss rates are plotted as a bar graph above the y=0 axis and are for 100 byte payload ping packets. Horizontal lines are indicated at packet losses of 1%, 5% and 12% at the boundaries of the connection qualities defined above.

- The response time is plotted as a blue line on a log axis, labelled to the right, and is the round trip time for 1000 byte ping payload packets.

- The host unreachability is plotted as a bar graph negatively extending from the y=0 axis. A host is deemed unreachable at a 30 minute interval if it did not respond to any of the 21 pings made at that 30 minute interval.

- The host unpredictability is plotted in green here as a negative value, can range from 0 (totally unpredictable) to 1 (highly predictable) and is a measure of the variability of the ping response time and loss during each 24 hour day. It is defined in more detail in Ping Unpredictability.

- ESnet hosts in general have good packet loss (median 0.79%). The average packet losses for the other groups vary from about 4.5% (N. America East) to 7.7% (International). Typically 25%-35% of the hosts in the non-ESnet groups are in the poor to bad range.

- The response time for ESnet hosts averages about 50ms, for N. America West it is about 80ms, for N. America East about 150ms and for International hosts around 200ms.

- Most of the unreachable problems are limited to a few hosts mainly in the International group (Dresden, Novosibirsk, Florence).

- The unpredictability is most marked for a few International hosts and roughly tracks the packet loss.

Delay

The scarcest and most valuable commodity is time. Studies in the late 1970s and early 1980s by Walt Doherty of IBM and others showed the economic value of Rapid Response Time:

| Response time | Regime |
|---|---|
| 0-0.4s | High productivity interactive response |
| 0.4-2s | Fully interactive regime |
| 2-12s | Sporadically interactive regime |
| 12s-600s | Break in contact regime |
| > 600s | Batch regime |

There is a threshold around 4-5s where complaints increase rapidly. For some newer Internet applications there are other thresholds, for example for voice a threshold for one way delay appears at about 150ms (see ITU Recommendation G.114 One-way transmission time, Feb 1996) - below this one can have toll quality calls, and above that point, the delay causes difficulty for people trying to have a conversation and frustration grows.

For keeping time in music, Stanford researchers found that the optimum amount of latency is 11 milliseconds. Below that delay people tended to speed up. Above that delay they tended to slow down. After about 50 milliseconds (or 70), performances tended to completely fall apart.

The human ear perceives sounds as simultaneous only if they are heard within 20 ms of each other, see http://www.mercurynews.com/News/ci_27039996/Music-at-the-speed-of-light-is-researchers-goal

For real-time multimedia (H.323) Performance Measurement and Analysis of H.323 Traffic gives one way delay (roughly a factor of two to get RTT) of: 0-150ms = Good, 150-300ms = Acceptable, and > 300ms = Poor.

The SLA for 1-way network latency target for Cisco TelePresence is beneath 150 msec. This does not include latency induced by encoding and decoding at the CTS endpoints.

All packets that comprise a frame of video must be delivered to the TelePresence end point before the replay buffer is depleted. Otherwise degradation of the video quality can occur. The peak-to-peak jitter target for Cisco TelePresence is under 10 msec.

The paper on The Internet at the Speed of Light gives several examples of the importance of reducing the RTT. Examples include search engines such as Google and Bing, Amazon sales and the stock exchange.

For real time haptic control and feedback for medical operations, Stanford researchers (see Shah, A., Harris, D., & Gutierrez, D. (2002). "Performance of Remote Anatomy and Surgical Training Applications Under Varied Network Conditions." World Conference on Educational Multimedia, Hypermedia and Telecommunications 2002(1), 662-667) found that a one way delay of <= 80 msec was needed.

The Internet Weather map identifies as bad any links with delays over 300ms.

Loss

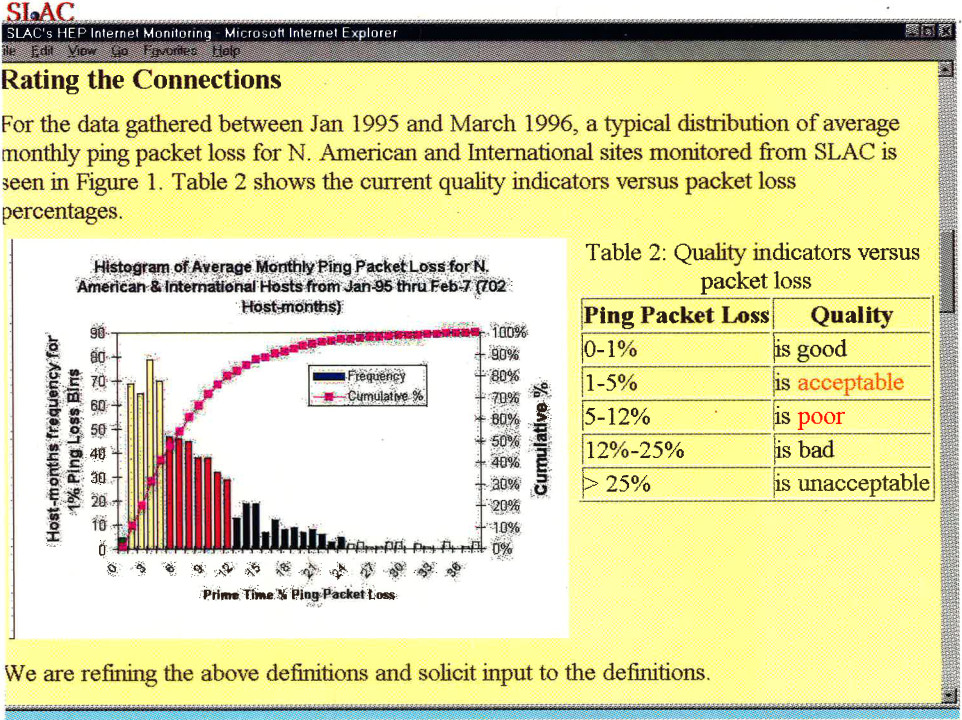

For the quality label we have focussed mainly on the packet losses. Our observations have been that above 4-6% packet loss video conferencing becomes irritating, and non native language speakers become unable to communicate. The occurrence of long delays of 4 seconds or more at a frequency of 4-5% or more is also irritating for interactive activities such as telnet and X windows. Above 10-12% packet loss there is an unacceptable level of back to back loss of packets and extremely long timeouts, connections start to get broken, and video conferencing is unusable (also see The issue of useless packet transmission for multimedia over the internet, where they say at page 10 "we conclude that for this video stream, the video quality is unintelligible when packet loss rates exceeds 12%"). On the other hand MSF (Multi Service Forum) officials said as a result of tests on next-generation networks for IPTV "testing showed that even one half of 1% of packet loss in a video stream can make the video quality unacceptable to end users" (see Computerworld, Oct 29, 2008). Originally the quality levels for packet loss were set at 0-1% = good, 1-5% = acceptable, 5-12% = poor, and greater than 12% = bad.

More recently, we have refined the levels to 0-0.1% = excellent, 0.1-1% = good, 1-2.5% = acceptable, 2.5-5% = poor, 5%-12% = very poor, and greater than 12% = bad. Changing the thresholds reflects changes in our emphasis, i.e. in 1995 we were primarily concerned with email and ftp. This quote from Vern Paxson sums up the main concern at the time: Conventional wisdom among TCP researchers holds that a loss rate of 5% has a significant adverse effect on TCP performance, because it will greatly limit the size of the congestion window and hence the transfer rate, while 3% is often substantially less serious. In other words, the complex behaviour of the Internet results in a significant change when packet loss climbs above 3%. In 2000 we were also concerned with X-window applications, web performance, and packet video conferencing. By 2005 we were interested in the real-time requirements of VoIP and were starting to look at voice over IP. As a rule, packet loss in VoIP (and VoFi) should never exceed 1 percent, which essentially means one voice skip every three minutes. DSP algorithms may compensate for up to 30 ms of missing data; any more than this, and missing sound will be noticeable to listeners. The Automotive Network eXchange (ANX) sets the threshold for packet loss rate (see ANX / Auto Linx Metrics) to be less than 0.1%.
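The refined packet loss levels listed above map naturally onto a small lookup. The sketch below is illustrative only: the function and constant names are hypothetical, and assigning a value that falls exactly on a boundary to the better category is an assumption, since the text does not say which side a boundary belongs to.

```python
# (upper bound in percent, label), taken from the refined levels in the text.
LOSS_LEVELS = [
    (0.1, "excellent"),
    (1.0, "good"),
    (2.5, "acceptable"),
    (5.0, "poor"),
    (12.0, "very poor"),
]

def loss_quality(loss_percent):
    """Return the quality label for a packet loss given in percent."""
    if loss_percent < 0:
        raise ValueError("packet loss cannot be negative")
    for upper, label in LOSS_LEVELS:
        if loss_percent <= upper:  # boundary handling is an assumption
            return label
    return "bad"

for loss in (0.05, 0.79, 3.0, 7.7, 15.0):
    print(f"{loss}% loss -> {loss_quality(loss)}")
```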

The ITU TIPHON working group (see General aspects of Quality of Service (QoS), DTR/TIPHON-05001 V1.2.5 (1998-09) technical report) has also defined < 3% packet loss as being good, > 15% for medium degradation, and 25% for poor degradation, for Internet telephony. It is very hard to give a single value below which packet loss gives satisfactory/acceptable/good quality interactive voice. There are many other variables involved including: delay, jitter, Packet Loss Concealment (PLC), whether the losses are random or bursty, and the compression algorithm (heavier compression uses less bandwidth but there is more sensitivity to packet loss since more data is contained/lost in a single packet). See for example Report of 1st ETSI VoIP Speech Quality Test Event, March 21-18, 2001, or Speech Processing, Transmission and Quality Aspects (STQ); Anonymous Test Report from 2nd Speech Quality Test Event 2002 ETSI TR 102 251 v1.1.1 (2003-10) or ETSI 3rd Speech Quality Test Event Summary report, Conversational Speech Quality for VoIP Gateways and IP Telephony.

Jonathan Rosenberg of Lucent Technology and Columbia University in G.729 Error Recovery for Internet Telephony presented at the V.O.N. Conference 9/1997 gave the following table showing the relation between the Mean Opinion Score (MOS) and consecutive packets lost.

| Consecutive frames lost | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|
| M.O.S. | 4.2 | 3.2 | 2.4 | 2.1 | 1.7 |

| Rating | Speech Quality | Level of distortion |
|---|---|---|
| 5 | Excellent | Imperceptible |
| 4 | Good | Just perceptible, not annoying |
| 3 | Fair | Perceptible, slightly annoying |
| 2 | Poor | Annoying but not objectionable |
| 1 | Unsatisfactory | Very annoying, objectionable |

So we set "Acceptable" packet loss at < 2.5%. The paper Performance Measurement and Analysis of H.323 traffic gives the following for VoIP (H.323): Loss = 0%-0.5% Good, = 0.5%-1.5% Acceptable and > 1.5% = Poor.

The above thresholds assume a flat random packet loss distribution. However, often the losses come in bursts. In order to quantify consecutive packet loss we have used, among other things, the Conditional Loss Probability (CLP) defined in Characterizing End-to-end Packet Delay and Loss in the Internet by J. Bolot in the Journal of High-Speed Networks, vol 2, no. 3 pp 305-323 December 1993 (it is also available on the web). Basically the CLP is the probability that if one packet is lost the following packet is also lost. More formally Conditional_loss_probability = Probability(loss(packet n+1) = true | loss(packet n) = true). The causes of such bursts include the convergence time required after a routing change (10s to 100s of seconds), loss and recovery of sync in DSL network (10-20 seconds), and bridge spanning tree re-configurations (~30 seconds). More on the impact of bursty packet loss can be found in Speech Quality Impact of Random vs. Bursty Packet losses by C. Dvorak, internal ITU-T document. This paper shows that whereas for random loss the drop off in MOS is linear with % packet loss, for bursty losses the fall off is much faster. Also see Packet Loss Burstiness. The drop off in MOS is from 5 to 3.25 for a change in packet loss from 0 to 1% and then it is linear, falling off to an MOS of about 2.5 by a loss of 5%.
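To make the CLP definition concrete, here is a small sketch that estimates it from a recorded sequence of per-packet loss flags. The function names and the two example sequences are hypothetical; the estimate is simply the number of lost packets that are immediately followed by another lost packet, divided by the number of lost packets that have a successor.

```python
def conditional_loss_probability(lost):
    """Estimate P(packet n+1 lost | packet n lost) from a list of booleans."""
    pairs = list(zip(lost, lost[1:]))
    first_lost = sum(1 for a, _ in pairs if a)
    both_lost = sum(1 for a, b in pairs if a and b)
    if first_lost == 0:
        return None  # undefined when no packet with a successor was lost
    return both_lost / first_lost

def loss_rate(lost):
    """Plain (unconditional) fraction of packets lost."""
    return sum(lost) / len(lost)

# Two hypothetical sequences with the same 30% loss rate (True means lost).
bursty = [False, False, True, True, True, False, False, False, False, False]
scattered = [True, False, False, True, False, False, False, True, False, False]

print(loss_rate(bursty), conditional_loss_probability(bursty))
print(loss_rate(scattered), conditional_loss_probability(scattered))
```

Both sequences lose 3 packets in 10, but only the bursty one has a high CLP, which is the distinction the plain loss rate hides.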

Other monitoring efforts may choose different thresholds possibly because they are concerned with different applications. MCI's traffic page labelled links as green if they have packet loss < 5%, red if > 10% and orange in between. The Internet Weather Report colored < 6% loss as green and > 12% as red, and orange otherwise. So they are both more forgiving than we are or at least have less granularity. Gary Norton in Network World Dec. 2000 (p 40), says "If more than 98% of the packets are delivered, the users should experience only slightly degraded response time, and sessions should not time out".

The figure below shows the frequency distributions for the average monthly packet loss for about 70 sites seen from SLAC between January 1995 and November 1997.

Due to the high amount of compression and motion-compensated prediction utilized by TelePresence video codecs, even a small amount of packet loss can result in visible degradation of the video quality. The SLA for packet loss target for Cisco TelePresence should be below 0.05 percent on the network.

For real time haptic control and feedback for medical operations, Stanford researchers found that loss was not a critical factor and losses up to 10% could be tolerated.

However for high performance data throughput over long distances (high RTTs), as can be seen in ESnet's article on Packet Loss, losses of as little as 0.0046% (1 packet loss in 22,000) on 10Gbps links with the MTU set at 9000 Bytes (the impact is greater with default MTUs of 1500 Bytes) result in factors of 10 reduction in throughput for RTTs > 10msec.

Jitter

The ITU TIPHON working group (see General aspects of Quality of Service (QoS) DTR/TIPHON-05001 V1.2.5 (1998-09) technical report) defines four categories of network degradation based on one-way jitter. These are:

| Degradation category | Peak jitter |
|---|---|
| Perfect | 0 msec. |
| Good | 75 msec. |
| Medium | 125 msec. |
| Poor | 225 msec. |

Web browsing and mail are fairly resistant to jitter, but any kind of streaming media (voice, video, music) is quite susceptible to jitter. Jitter is a symptom that there is congestion, or not enough bandwidth to handle the traffic.

The jitter specifies the length of the VoIP codec playout buffers to prevent over- or under-flow. An objective could be to specify that say 95% of packet delay variations should be within the interval [-30msec, +30msec].
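An objective of this kind can be checked directly against measured delays. The sketch below is a hedged illustration: the function names and the sample delays are hypothetical, and the delay variation is taken as the difference between successive delay samples, which is one common reading of "packet delay variation".

```python
def fraction_within(delays_ms, bound_ms=30.0):
    """Fraction of successive delay differences inside [-bound_ms, +bound_ms]."""
    deltas = [b - a for a, b in zip(delays_ms, delays_ms[1:])]
    if not deltas:
        raise ValueError("need at least two delay samples")
    inside = sum(1 for d in deltas if -bound_ms <= d <= bound_ms)
    return inside / len(deltas)

def meets_objective(delays_ms, bound_ms=30.0, target=0.95):
    """True if at least `target` of the delay variations are within the bound."""
    return fraction_within(delays_ms, bound_ms) >= target

# Hypothetical delay samples in milliseconds.
delays = [100, 110, 105, 150, 148]
print(fraction_within(delays), meets_objective(delays))
```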

For real-time multimedia (H.323) Performance Measurement and Analysis of H.323 Traffic gives for one way: jitter = 0-20ms = Good, jitter = 20-50ms = Acceptable, > 50ms = Poor. We measure round-trip jitter which is roughly two times the one way jitter.

For real time haptic control and feedback for medical operations, Stanford researchers found that jitter was critical and jitters of < 1msec were needed.

Throughput

Performance requirements (from AT&T):

- 768k - 1.5Mbps: sharing photos, downloading music, emailing, web surfing.

- 3.0Mbps - 6.0Mbps: streaming video, online gaming, home networking.

- > 6Mbps: hosting websites, watching TV online, downloading movies.

- The following is from Design Considerations for Cisco Telepresence over a PIN Architecture. The bandwidth utilized per Cisco TelePresence endpoint varies based on factors that include the model deployed, the desired video resolution, interoperability with legacy video conferencing systems, and whether high or low speed auxiliary video input is deployed for the document camera or slide presentation. For example, when deploying 1080p best video resolution with high speed auxiliary video input and interoperability, the bandwidth requirement can be as high as 20.4 Mbps for a CTS-3200 and CTS-3000, or 10.8 Mbps for a CTS-1000 and CTS-500.

- FCC Broadband Guide

Utilization

Link utilization can be read out from routers via SNMP MIBs (assuming one has the authorization to read out such information). "At around 90% utilization a typical network will discard 2% of the packets, but this varies. Low-bandwidth links have less breadth to handle bursts, frequently discarding packets at only 80% utilization... A complete network health check should measure link capacity weekly. Here's a suggested color code:

- RED: Packet discard > 2%, deploy no new application.

- AMBER: Utilization > 60%. Consider a network upgrade.

- GREEN: Utilization < 60%. Acceptable for new application deployment."

"Queuing theory suggests that the variation in round trip time, σ, varies proportional to 1/(1-L) where L is the current network load, 0<=L<=1. If an Internet is running at 50% capacity, we expect the round trip delay to vary by a factor of ±2σ, or 4. When the load reaches 80%, we expect a variation of 10." Internetworking with TCP/IP, Principles, Protocols and Architecture, Douglas Comer, Prentice Hall. This suggests one may be able to get a measure of the utilization by looking at the variability in the RTT. We have not validated this suggestion at this time.

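The arithmetic in the queuing theory quotation above can be reproduced with a short sketch. The function names are hypothetical, and reading the quoted "4" and "10" as the full plus-or-minus spread, i.e. twice the 1/(1-L) factor, is an interpretation rather than something the text states explicitly.

```python
def rtt_variation_factor(load):
    """The 1/(1-L) factor for a network load L with 0 <= L < 1."""
    if not 0 <= load < 1:
        raise ValueError("load must satisfy 0 <= load < 1")
    return 1.0 / (1.0 - load)

def rtt_spread(load):
    """Full plus-or-minus spread, taken here as twice the factor (an assumption)."""
    return 2.0 * rtt_variation_factor(load)

for load in (0.5, 0.8):
    print(f"load {load:.0%}: factor {rtt_variation_factor(load):.1f}, "
          f"spread {rtt_spread(load):.1f}")
```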
Reachability

The Bellcore Generic Requirement 929 (GR-929-CORE Reliability and Quality Measurements for Telecommunications Systems (RQMS) (Wireline), is actively used by suppliers and service providers as the basis of supplier reporting of quarterly performance measured against objectives. Each year, following publication of the most recent issue of GR-929-CORE, such revised performance objectives are implemented) indicates that the core of the phone network aims for a 99.999% availability. That translates to less than 5.3 minutes of downtime per year. As written the measurement does not include outages of less than 30 seconds. This is aimed at the current PSTN digital switches (such as Electronic Switching System 5 (5ESS) and the Nortel DMS250), using today's voice-over-ATM technology. A public switching system is required to limit the total outage time during a 40-year period to less than two hours, or less than 3 minutes per year, a number equivalent to an availability of 99.99943%. With the convergence of data and voice, this means that data networks that will carry multiple services including voice must start from similar or better availability or end-users will be annoyed and frustrated.
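The availability figures above follow from simple arithmetic, sketched below. The function names are hypothetical and a 365-day year is assumed.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes; leap years ignored

def downtime_per_year_min(availability_pct):
    """Minutes of downtime per year implied by an availability percentage."""
    return (1 - availability_pct / 100) * MINUTES_PER_YEAR

def availability_from_downtime(downtime_min_per_year):
    """Availability percentage implied by yearly downtime in minutes."""
    return 100 * (1 - downtime_min_per_year / MINUTES_PER_YEAR)

# "Five nines" corresponds to about 5.3 minutes per year.
print(round(downtime_per_year_min(99.999), 2))

# Two hours over 40 years is 3 minutes per year.
per_year = 2 * 60 / 40
print(per_year, round(availability_from_downtime(per_year), 5))
```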

Levels of availability are often cast into Service Level Agreements. The table below (based on a Cahners In-Stat survey of a sample of Application Service Providers (ASPs)) shows the levels of availability offered by ASPs and the levels chosen by customers.

| | Levels offered | Chosen by customer |
|---|---|---|
| Less than 99% | 26% | 19% |
| 99% availability | 39% | 24% |
| 99.9% availability | 24% | 15% |
| 99.99% availability | 15% | 5% |
| 99.999% availability | 18% | 5% |
| More than 99.999% availability | 13% | 15% |
| Don't know | 13% | 18% |
| Weighted average of availability offered | 99.5% | 99.4% |

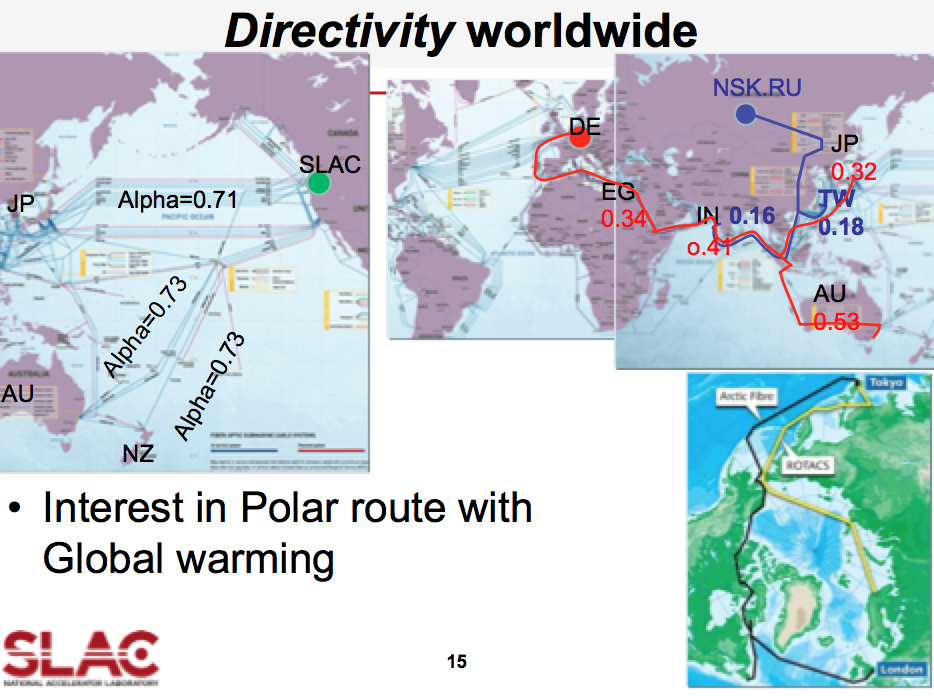

Directivity

The theoretical limits on Directivity are that it must be ≥ 0 and ≤ 1. A value of 1 indicates the route is a great circle route and the only delay is due to the speed of light in fibre or electrons in copper. Values > 1 usually indicate the source or destination or both have incorrect locations, which makes Directivity a useful diagnostic for host locations. Typical values of Directivity between research and educational sites in the US, Canada, Europe, East Asia, and Australia/New Zealand vary from 0.15 - 0.75 with a median of about 0.4. This corresponds to about 4 times slower than the speed of light in vacuum. Low values of Directivity typically mean a very indirect route, or a satellite or slow connection (e.g. wireless).

- by area (e.g. N. America, W. Europe, Japan, Asia, country, top level domain);

- by host pair separation (e.g. trans-oceanic links, intercontinental links, Internet eXchange Points);

- Network Service Provider backbone that the remote site is connected to (e.g. ESnet, Internet 2, DANTE ...);

- common interest affiliation (e.g. XIWT, HENP, experiment collaboration such as BaBar, the European or DOE National Laboratories, ESnet program interests, perfSONAR);

- by monitoring site;

- one remote site seen from many monitoring sites.

We need to be able to select the groupings by monitoring sites and by remote sites. Also we need the capability to include all members of a group, to join groups and to exclude members of a group.

At the same time it is critical to choose the remote sites and host-pairs carefully so that they are representative of the information one is hoping to find out. We have therefore selected a set of about 50 "Beacon Sites" which are monitored by all monitoring sites and which are representative of the various affinity groups we are interested in. An example of a graph showing ping response times for groups of sites is seen below:

The percentages shown to the right of the legend of the packet loss chart are the improvements (reduction in packet loss) per month for exponential trend line fits to the packet loss data. Note that a 5%/month improvement is equivalent to 44%/year improvement (e.g. a 10% loss would drop to 5.6% in a year).

Source: https://www.slac.stanford.edu/comp/net/wan-mon/tutorial.html
